
Environment:

Android 11 source code

Android 11 kernel source code

Source viewer: Sublime Text

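Binder JNI method registration

Zygote startup

1-1. Starting the zygote process

zygote is created by the init process, which parses the zygote rc file (init.zygote.rc here; on a device it is one of the init.zygote32.rc / init.zygote64.rc variants, selected by the ro.zygote property). The executable behind zygote is app_process, its source file is app_main.cpp, and the process name is zygote.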

```
service zygote /system/bin/app_process -Xzygote /system/bin --zygote --start-system-server
    class main
    priority -20
    user root
    group root readproc reserved_disk
    socket zygote stream 660 root system
    socket usap_pool_primary stream 660 root system
    onrestart exec_background - system system -- /system/bin/vdc volume abort_fuse
    onrestart write /sys/power/state on
    onrestart restart audioserver
    onrestart restart cameraserver
    onrestart restart media
    onrestart restart netd
    onrestart restart wificond
    writepid /dev/cpuset/foreground/tasks
```
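The following C sketch shows the shape of this declaration. It splits the first line into three parts: the service name, the executable path, and the arguments that end up in app_process's argv. It is a simplified illustration, not init's real parser. `struct service_decl`, `parse_service_line()` and `MAX_ARGS` are hypothetical names. Quoting, tabs and the indented option lines (`class`, `user`, `socket`, `onrestart`, ...) are deliberately ignored.

```c
#include <assert.h>
#include <string.h>

#define MAX_ARGS 16  /* hypothetical limit for this sketch */

/* Hypothetical view of "service <name> <path> [<argument>]*". */
struct service_decl {
    const char *name;            /* e.g. "zygote" */
    const char *path;            /* e.g. "/system/bin/app_process" */
    const char *args[MAX_ARGS];  /* arguments passed on to the executable */
    int argc;
};

/* Splits `line` in place on spaces. Returns 0 on success, or -1 if the line
 * is not a service declaration. Pointers stored in `out` point into `line`. */
static int parse_service_line(char *line, struct service_decl *out)
{
    char *tokens[MAX_ARGS + 3];   /* "service", name, path, then arguments */
    int n = 0;
    char *p = line;

    assert(line != NULL && out != NULL);
    while (*p != '\0' && n < MAX_ARGS + 3) {
        while (*p == ' ')
            *p++ = '\0';          /* terminate the previous token */
        if (*p == '\0')
            break;
        tokens[n++] = p;          /* start of the next token */
        while (*p != '\0' && *p != ' ')
            p++;
    }
    if (n < 3 || strcmp(tokens[0], "service") != 0)
        return -1;
    out->name = tokens[1];
    out->path = tokens[2];
    out->argc = n - 3;
    for (int i = 0; i < out->argc; i++)
        out->args[i] = tokens[i + 3];
    return 0;
}
```

Called on the first line of the rc snippet, it yields the name `zygote`, the path `/system/bin/app_process`, and four arguments starting with `-Xzygote`.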

1-2. Running main() in app_main.cpp

Location: frameworks/base/cmds/app_process/app_main.cpp

```cpp
int main(int argc, char* const argv[])
{
    ...
    AppRuntime runtime(argv[0], computeArgBlockSize(argc, argv));
    ...
    while (i < argc) {
        const char* arg = argv[i++];
        if (strcmp(arg, "--zygote") == 0) {
            // Set the zygote flag to true
            zygote = true;
            niceName = ZYGOTE_NICE_NAME;
        }
        ...
    }
    ...
    // Run AndroidRuntime::start() (defined in AndroidRuntime.cpp)
    if (zygote) {
        runtime.start("com.android.internal.os.ZygoteInit", args, zygote);
    } else if (className) {
        runtime.start("com.android.internal.os.RuntimeInit", args, zygote);
    } else {
        fprintf(stderr, "Error: no class name or --zygote supplied.\n");
        app_usage();
        LOG_ALWAYS_FATAL("app_process: no class name or --zygote supplied.");
    }
}
```

AppRuntime inherits from AndroidRuntime, so runtime.start() runs AndroidRuntime::start().
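The decision main() makes can be reduced to a small C sketch. It scans the arguments for `--zygote` and picks the Java class that is handed to AndroidRuntime::start(). The sketch assumes a heavily simplified argument model. `select_start_class()` is a hypothetical helper, and `class_name` stands in for the optional class name that the real main() extracts. The real main() also handles runtime options, `--start-system-server`, `--nice-name` and more.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: returns the class AndroidRuntime::start() would run,
 * or NULL when app_process would print its usage and abort. */
static const char *select_start_class(int argc, const char *const argv[],
                                      const char *class_name)
{
    bool zygote = false;

    assert(argc >= 1 && argv != NULL);
    for (int i = 1; i < argc; i++) {      /* argv[0] is the program name */
        if (strcmp(argv[i], "--zygote") == 0)
            zygote = true;
    }
    if (zygote)
        return "com.android.internal.os.ZygoteInit";
    if (class_name != NULL)
        return "com.android.internal.os.RuntimeInit";
    return NULL;
}
```

Zygote mode is selected purely by the presence of `--zygote`, which is exactly the flag the rc file passes.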

1-3. AndroidRuntime::start

start() calls startReg() to register the JNI methods.

Location: frameworks/base/core/jni/AndroidRuntime.cpp

```cpp
void AndroidRuntime::start(const char* className, const Vector<String8>& options, bool zygote)
{
    ...
    /* start the virtual machine (the Android VM) */
    JniInvocation jni_invocation;
    jni_invocation.Init(NULL);
    JNIEnv* env;
    if (startVm(&mJavaVM, &env, zygote, primary_zygote) != 0) {
        return;
    }
    ...
    /*
     * Register android functions (the Android JNI methods).
     */
    if (startReg(env) < 0) {
        ALOGE("Unable to register all android natives\n");
        return;
    }
    ...
}
```

Location: frameworks/base/core/jni/AndroidRuntime.cpp

```cpp
/*
 * Register android native functions with the VM.
 */
/*static*/ int AndroidRuntime::startReg(JNIEnv* env)
{
    ATRACE_NAME("RegisterAndroidNatives");
    /*
     * This hook causes all future threads created in this process to be
     * attached to the JavaVM.  (This needs to go away in favor of JNI
     * Attach calls.)
     */
    androidSetCreateThreadFunc((android_create_thread_fn) javaCreateThreadEtc);

    ALOGV("--- registering native functions ---\n");

    /*
     * Every "register" function calls one or more things that return
     * a local reference (e.g. FindClass).  Because we haven't really
     * started the VM yet, they're all getting stored in the base frame
     * and never released.  Use Push/Pop to manage the storage.
     */
    env->PushLocalFrame(200);

    // Register the JNI methods listed in gRegJNI
    if (register_jni_procs(gRegJNI, NELEM(gRegJNI), env) < 0) {
        env->PopLocalFrame(NULL);
        return -1;
    }
    env->PopLocalFrame(NULL);

    //createJavaThread("fubar", quickTest, (void*) "hello");

    return 0;
}
```

Location: frameworks/base/core/jni/AndroidRuntime.cpp

```cpp
static int register_jni_procs(const RegJNIRec array[], size_t count, JNIEnv* env)
{
    for (size_t i = 0; i < count; i++) {
        if (array[i].mProc(env) < 0) {
#ifndef NDEBUG
            ALOGD("----------!!! %s failed to load\n", array[i].mName);
#endif
            return -1;
        }
    }
    return 0;
}

static const RegJNIRec gRegJNI[] = {
    ...
    // Binder registration (dynamic JNI registration)
    REG_JNI(register_android_os_Binder),
    REG_JNI(register_android_os_Parcel),
    REG_JNI(register_android_os_HidlMemory),
    REG_JNI(register_android_os_HidlSupport),
    ...
};
```
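The gRegJNI / register_jni_procs() pattern is a table of registration callbacks walked in order, which stops at the first failure. The sketch below reproduces that shape in plain C so it compiles without jni.h. `FakeEnv`, `RegRec`, `REG` and the `register_fake_*` callbacks are hypothetical stand-ins for JNIEnv, RegJNIRec, REG_JNI and the real register_android_os_* functions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical stand-in for JNIEnv: counts successful registrations. */
typedef struct FakeEnv { int registered; } FakeEnv;

/* Same shape as RegJNIRec: a callback plus its name for error logs. */
typedef struct {
    int (*mProc)(FakeEnv *env);
    const char *mName;
} RegRec;

#define REG(fn) { fn, #fn }
#define NELEM(x) (sizeof(x) / sizeof((x)[0]))

/* Hypothetical registration callbacks. */
static int register_fake_binder(FakeEnv *env) { env->registered++; return 0; }
static int register_fake_parcel(FakeEnv *env) { env->registered++; return 0; }
static int register_fake_broken(FakeEnv *env) { (void)env; return -1; }

/* Same loop as register_jni_procs(): run each callback in table order and
 * stop at the first one that reports failure. */
static int register_procs(const RegRec array[], size_t count, FakeEnv *env)
{
    assert(env != NULL);
    for (size_t i = 0; i < count; i++) {
        if (array[i].mProc(env) < 0) {
            fprintf(stderr, "%s failed to load\n", array[i].mName);
            return -1;
        }
    }
    return 0;
}

static const RegRec gGoodTable[] = {
    REG(register_fake_binder),
    REG(register_fake_parcel),
};

static const RegRec gBadTable[] = {
    REG(register_fake_binder),
    REG(register_fake_broken),
    REG(register_fake_parcel),
};
```

With gBadTable, the failing entry stops the loop, so register_fake_parcel never runs. startReg() reacts to the same -1 by popping the local frame and returning.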

register_android_os_Binder

Location: frameworks/base/core/jni/android_util_Binder.cpp

```cpp
int register_android_os_Binder(JNIEnv* env)
{
    // Start registering the Binder natives
    if (int_register_android_os_Binder(env) < 0)
        return -1;
    if (int_register_android_os_BinderInternal(env) < 0)
        return -1;
    if (int_register_android_os_BinderProxy(env) < 0)
        return -1;
    ...
}
```

2-1. int_register_android_os_Binder

Location: frameworks/base/core/jni/android_util_Binder.cpp

```cpp
const char* const kBinderPathName = "android/os/Binder";

// Table for dynamic JNI registration
static const JNINativeMethod gBinderMethods[] = {
     /* name, signature, funcPtr */
    // @CriticalNative
    { "getCallingPid", "()I", (void*)android_os_Binder_getCallingPid },
    // @CriticalNative
    { "getCallingUid", "()I", (void*)android_os_Binder_getCallingUid },
    // @CriticalNative
    { "isHandlingTransaction", "()Z", (void*)android_os_Binder_isHandlingTransaction },
    ...
    { "flushPendingCommands", "()V", (void*)android_os_Binder_flushPendingCommands },
    { "getNativeBBinderHolder", "()J", (void*)android_os_Binder_getNativeBBinderHolder },
    { "getNativeFinalizer", "()J", (void*)android_os_Binder_getNativeFinalizer },
    { "blockUntilThreadAvailable", "()V", (void*)android_os_Binder_blockUntilThreadAvailable },
    { "getExtension", "()Landroid/os/IBinder;", (void*)android_os_Binder_getExtension },
    { "setExtension", "(Landroid/os/IBinder;)V", (void*)android_os_Binder_setExtension },
};

static int int_register_android_os_Binder(JNIEnv* env)
{
    // Look up the android.os.Binder class by kBinderPathName
    jclass clazz = FindClassOrDie(env, kBinderPathName);

    // Keep a global reference to the class in gBinderOffsets.mClass
    gBinderOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    // Cache method IDs by name and signature: execTransact() is the Java
    // method invoked for incoming transactions
    gBinderOffsets.mExecTransact = GetMethodIDOrDie(env, clazz, "execTransact", "(IJJI)Z");
    gBinderOffsets.mGetInterfaceDescriptor = GetMethodIDOrDie(env, clazz, "getInterfaceDescriptor",
        "()Ljava/lang/String;");
    // Cache the field ID of Binder.mObject (a long)
    gBinderOffsets.mObject = GetFieldIDOrDie(env, clazz, "mObject", "J");

    // Register gBinderMethods, binding the Java native methods to these C++
    // functions; this is the channel through which Java calls into JNI
    return RegisterMethodsOrDie(
        env, kBinderPathName,
        gBinderMethods, NELEM(gBinderMethods));
}
```

2-2. int_register_android_os_BinderInternal and int_register_android_os_BinderProxy follow roughly the same flow as int_register_android_os_Binder: look up the Java class, cache the IDs they need, and register a native-method table.
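Conceptually, gBinderMethods is a table keyed by Java method name and JNI signature. The sketch below models that table and a lookup over it in C. It only illustrates the data structure. The real RegisterNatives() (reached through RegisterMethodsOrDie) binds each pointer to its Java native method inside the VM instead of searching at call time. `NativeMethod`, `GenericFn`, `find_native()` and the `fake_*` functions are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Generic function-pointer type so entries of different signatures fit. */
typedef void (*GenericFn)(void);

/* Same three fields as JNI's JNINativeMethod: name, signature, pointer. */
typedef struct {
    const char *name;
    const char *signature;
    GenericFn fnPtr;
} NativeMethod;

/* Hypothetical stand-ins that return fixed values for illustration. */
static int fake_getCallingPid(void) { return 1234; }
static int fake_getCallingUid(void) { return 1000; }

static const NativeMethod gFakeBinderMethods[] = {
    /* name, signature, funcPtr */
    { "getCallingPid", "()I", (GenericFn)fake_getCallingPid },
    { "getCallingUid", "()I", (GenericFn)fake_getCallingUid },
};

/* Returns the entry matching both name and signature, or NULL. Java
 * overloads differ only in signature, so both must match. */
static GenericFn find_native(const NativeMethod *methods, size_t count,
                             const char *name, const char *sig)
{
    assert(methods != NULL && name != NULL && sig != NULL);
    for (size_t i = 0; i < count; i++) {
        if (strcmp(methods[i].name, name) == 0 &&
            strcmp(methods[i].signature, sig) == 0)
            return methods[i].fnPtr;
    }
    return NULL;
}
```

A caller casts the result back to the real type before invoking it, for example `((int (*)(void))f)()`.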

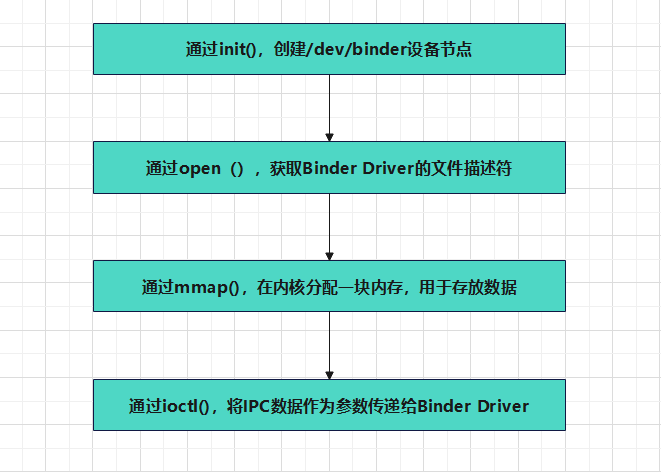

Binder driver

The overall flow is as follows:

1. binder_init

Location: kernel/common/drivers/android/binder.c

```c
// Registers binder_init() to run during boot, at the device initcall level
device_initcall(binder_init);

// Comma-separated list of binder device names to create
char *binder_devices_param = CONFIG_ANDROID_BINDER_DEVICES;

static int __init binder_init(void)
{
    int ret;
    char *device_name, *device_tmp;
    struct binder_device *device;
    struct hlist_node *tmp;
    char *device_names = NULL;

    ret = binder_alloc_shrinker_init();
    if (ret)
        return ret;
    ...
    // Create the "binder" directory in debugfs, with a "proc" directory inside it
    binder_debugfs_dir_entry_root = debugfs_create_dir("binder", NULL);
    if (binder_debugfs_dir_entry_root)
        binder_debugfs_dir_entry_proc = debugfs_create_dir("proc",
                                         binder_debugfs_dir_entry_root);

    // Create the various debug files in the binder directory
    if (binder_debugfs_dir_entry_root) {
        ...
    }

    // Only when binderfs is disabled and binder_devices_param is non-empty
    if (!IS_ENABLED(CONFIG_ANDROID_BINDERFS) &&
        strcmp(binder_devices_param, "") != 0) {
        /*
         * Copy the module_parameter string, because we don't want to
         * tokenize it in-place.
         */
        // Duplicate binder_devices_param (allocated with GFP_KERNEL)
        device_names = kstrdup(binder_devices_param, GFP_KERNEL);
        if (!device_names) {
            ret = -ENOMEM;
            goto err_alloc_device_names_failed;
        }

        device_tmp = device_names;
        // Split the list on ',' with strsep() and create one device per name
        while ((device_name = strsep(&device_tmp, ","))) {
            ret = init_binder_device(device_name);
            if (ret)
                goto err_init_binder_device_failed;
        }
    }
    ...
}
```

Where is CONFIG_ANDROID_BINDER_DEVICES defined? Location: kernel/common/drivers/android/Kconfig

```
config ANDROID_BINDER_DEVICES
    string "Android Binder devices"
    depends on ANDROID_BINDER_IPC
    default "binder,hwbinder,vndbinder"
    help
      Default value for the binder.devices parameter.

      The binder.devices parameter is a comma-separated list of strings
      that specifies the names of the binder device nodes that will be
      created. Each binder device has its own context manager, and is
      therefore logically separated from the other devices.
```

init_binder_device()

binder_init() passes each name in this list (by default binder, hwbinder and vndbinder) to init_binder_device(), which registers one device node per name.
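The loop in binder_init() that feeds init_binder_device() splits the device list on commas. The user-space C sketch below models that step under three assumptions. First, `next_name()` reimplements strsep() by hand, since strsep() is a BSD/glibc extension rather than ISO C. Second, malloc() stands in for kstrdup(..., GFP_KERNEL). Third, `split_binder_devices()` records the names instead of creating devices. All of these helpers are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define MAX_DEVICES 8  /* hypothetical capacity for this sketch */

/* Behaves like strsep(cursor, ","): returns the current token, terminates it
 * in place, and advances *cursor past the comma (NULL after the last token). */
static char *next_name(char **cursor)
{
    char *start = *cursor;
    char *comma;

    if (start == NULL)
        return NULL;
    comma = strchr(start, ',');
    if (comma != NULL) {
        *comma = '\0';
        *cursor = comma + 1;
    } else {
        *cursor = NULL;
    }
    return start;
}

/* Copies `param` and splits the copy into device names. Returns the number
 * of names, 0 for an empty list, or -1 on error. The names point into the
 * copy, which the caller frees through names[0]. */
static int split_binder_devices(const char *param, char *names[], int max)
{
    char *copy, *cursor, *name;
    int n = 0;

    assert(param != NULL && names != NULL && max > 0);
    if (strcmp(param, "") == 0)
        return 0;                       /* nothing to create */
    copy = malloc(strlen(param) + 1);   /* stands in for kstrdup() */
    if (copy == NULL)
        return -1;
    strcpy(copy, param);

    cursor = copy;
    while ((name = next_name(&cursor)) != NULL) {
        if (n == max) {                 /* more names than the caller can hold */
            free(copy);
            return -1;
        }
        names[n++] = name;              /* binder_init() calls init_binder_device(name) here */
    }
    return n;
}
```

The returned names point into the duplicated string, so the copy has to outlive every use of the names.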

Location: kernel/common/drivers/android/binder.c (struct miscdevice comes from include/linux/miscdevice.h, struct binder_device from drivers/android/binder_internal.h)

```c
struct miscdevice {
    int minor;
    const char *name;
    const struct file_operations *fops;
    struct list_head list;
    struct device *parent;
    struct device *this_device;
    const struct attribute_group **groups;
    const char *nodename;
    umode_t mode;
};

/**
 * struct binder_device - information about a binder device node
 * @hlist:          list of binder devices (only used for devices requested via
 *                  CONFIG_ANDROID_BINDER_DEVICES)
 * @miscdev:        information about a binder character device node
 * @context:        binder context information
 * @binderfs_inode: This is the inode of the root dentry of the super block
 *                  belonging to a binderfs mount.
 */
struct binder_device {
    struct hlist_node hlist;
    struct miscdevice miscdev;
    struct binder_context context;
    struct inode *binderfs_inode;
    refcount_t ref;
};

static int __init init_binder_device(const char *name)
{
    int ret;
    // Holds the information the driver keeps about this device node
    struct binder_device *binder_device;

    // Allocate a zeroed binder_device
    binder_device = kzalloc(sizeof(*binder_device), GFP_KERNEL);
    if (!binder_device)
        return -ENOMEM;

    // Fill in the device information
    binder_device->miscdev.fops = &binder_fops;
    binder_device->miscdev.minor = MISC_DYNAMIC_MINOR;
    binder_device->miscdev.name = name;

    refcount_set(&binder_device->ref, 1);
    binder_device->context.binder_context_mgr_uid = INVALID_UID;
    binder_device->context.name = name;
    mutex_init(&binder_device->context.context_mgr_node_lock);

    // Register the misc character device; it shows up as /dev/<name>
    ret = misc_register(&binder_device->miscdev);
    if (ret < 0) {
        kfree(binder_device);
        return ret;
    }

    // Add this binder_device at the head of the global binder_devices hlist
    hlist_add_head(&binder_device->hlist, &binder_devices);

    return ret;
}
```

2. binder_open

Location: kernel/common/drivers/android/binder.c

```c
// struct binder_proc describes a process using binder, plus related bookkeeping
struct binder_proc {
    struct hlist_node proc_node;        // entry in the global list of binder processes
    struct rb_root threads;             // root of the binder_thread red-black tree
    struct rb_root nodes;               // root of the binder_node red-black tree
    struct rb_root refs_by_desc;        // root of the binder_ref red-black tree, keyed by handle
    struct rb_root refs_by_node;        // root of the binder_ref red-black tree, keyed by node pointer
    struct list_head waiting_threads;
    int pid;                            // PID of the process (thread-group leader)
    struct task_struct *tsk;
    const struct cred *cred;
    struct hlist_node deferred_work_node;
    int deferred_work;
    int outstanding_txns;
    bool is_dead;
    bool is_frozen;
    bool sync_recv;
    bool async_recv;
    wait_queue_head_t freeze_wait;
    struct list_head todo;
    struct binder_stats stats;
    struct list_head delivered_death;
    int max_threads;
    int requested_threads;
    int requested_threads_started;
    int tmp_ref;
    struct binder_priority default_priority;
    struct dentry *debugfs_entry;
    struct binder_alloc alloc;
    struct binder_context *context;
    spinlock_t inner_lock;
    spinlock_t outer_lock;
    struct dentry *binderfs_entry;
    bool oneway_spam_detection_enabled;
};

static int binder_open(struct inode *nodp, struct file *filp)
{
    struct binder_proc *proc, *itr;
    struct binder_device *binder_dev;
    struct binderfs_info *info;
    struct dentry *binder_binderfs_dir_entry_proc = NULL;
    bool existing_pid = false;

    // Allocate the binder_proc for this process
    proc = kzalloc(sizeof(*proc), GFP_KERNEL);
    if (proc == NULL)
        return -ENOMEM;
    spin_lock_init(&proc->inner_lock);
    spin_lock_init(&proc->outer_lock);
    // Take a reference on the task_struct of the calling process's group leader
    get_task_struct(current->group_leader);
    // ...and store it in proc->tsk
    proc->tsk = current->group_leader;
    proc->cred = get_cred(filp->f_cred);
    // Initialize the process-wide todo work list
    INIT_LIST_HEAD(&proc->todo);
    // Wait queue for processes waiting until all outstanding transactions
    // have been processed (used when freezing)
    init_waitqueue_head(&proc->freeze_wait);
    ...
    refcount_inc(&binder_dev->ref);
    proc->context = &binder_dev->context;
    // Initialize the per-process allocator proc->alloc
    binder_alloc_init(&proc->alloc);

    binder_stats_created(BINDER_STAT_PROC);
    // PID of the process (group leader)
    proc->pid = current->group_leader->pid;
    // List of death notifications already delivered
    INIT_LIST_HEAD(&proc->delivered_death);
    // List of threads waiting for work
    INIT_LIST_HEAD(&proc->waiting_threads);
    // Associate binder_proc with filp, so later calls on this file
    // (mmap, ioctl) can find it through filp->private_data
    filp->private_data = proc;
    ...
}
```

3. binder_mmap

Location: kernel/common/include/linux/mm_types.h (vm_area_struct is a core memory-management structure; binder_mmap() itself is in kernel/common/drivers/android/binder.c)

```c
/*
 * This struct describes a virtual memory area. There is one of these
 * per VM-area/task. A VM area is any part of the process virtual memory
 * space that has a special rule for the page-fault handlers (ie a shared
 * library, the executable area etc).
 */
// Virtual memory area descriptor
struct vm_area_struct {
    /* The first cache line has the info for VMA tree walking. */

    unsigned long vm_start;     /* Our start address within vm_mm. */
    unsigned long vm_end;       /* The first byte after our end address
                                   within vm_mm. */

    /* linked list of VM areas per task, sorted by address */
    struct vm_area_struct *vm_next, *vm_prev;

    struct rb_node vm_rb;

    /*
     * Largest free memory gap in bytes to the left of this VMA.
     * Either between this VMA and vma->vm_prev, or between one of the
     * VMAs below us in the VMA rbtree and its ->vm_prev. This helps
     * get_unmapped_area find a free area of the right size.
     */
    unsigned long rb_subtree_gap;

    /* Second cache line starts here. */

    struct mm_struct *vm_mm;    /* The address space we belong to. */

    /*
     * Access permissions of this VMA.
     * See vmf_insert_mixed_prot() for discussion.
     */
    pgprot_t vm_page_prot;
    unsigned long vm_flags;     /* Flags, see mm.h. */

    /*
     * For areas with an address space and backing store,
     * linkage into the address_space->i_mmap interval tree.
     *
     * For private anonymous mappings, a pointer to a null terminated string
     * in the user process containing the name given to the vma, or NULL
     * if unnamed.
     */
    union {
        struct {
            struct rb_node rb;
            unsigned long rb_subtree_last;
        } shared;
        const char __user *anon_name;
    };

    /*
     * A file's MAP_PRIVATE vma can be in both i_mmap tree and anon_vma
     * list, after a COW of one of the file pages. A MAP_SHARED vma
     * can only be in the i_mmap tree. An anonymous MAP_PRIVATE, stack
     * or brk vma (with NULL file) can only be in an anon_vma list.
     */
    struct list_head anon_vma_chain; /* Serialized by mmap_lock &
                                      * page_table_lock */
    struct anon_vma *anon_vma;  /* Serialized by page_table_lock */

    /* Function pointers to deal with this struct. */
    const struct vm_operations_struct *vm_ops;

    /* Information about our backing store: */
    unsigned long vm_pgoff;     /* Offset (within vm_file) in PAGE_SIZE
                                   units */
    struct file * vm_file;      /* File we map to (can be NULL). */
    void * vm_private_data;     /* was vm_pte (shared mem) */

#ifdef CONFIG_SWAP
    atomic_long_t swap_readahead_info;
#endif
#ifndef CONFIG_MMU
    struct vm_region *vm_region;    /* NOMMU mapping region */
#endif
#ifdef CONFIG_NUMA
    struct mempolicy *vm_policy;    /* NUMA policy for the VMA */
#endif
    struct vm_userfaultfd_ctx vm_userfaultfd_ctx;
} __randomize_layout;
```

```c
static int binder_mmap(struct file *filp, struct vm_area_struct *vma)
{
    // Find the binder_proc through filp (set up by binder_open)
    struct binder_proc *proc = filp->private_data;

    if (proc->tsk != current->group_leader)
        return -EINVAL;

    binder_debug(BINDER_DEBUG_OPEN_CLOSE,
             "%s: %d %lx-%lx (%ld K) vma %lx pagep %lx\n",
             __func__, proc->pid, vma->vm_start, vma->vm_end,
             (vma->vm_end - vma->vm_start) / SZ_1K, vma->vm_flags,
             (unsigned long)pgprot_val(vma->vm_page_prot));

    if (vma->vm_flags & FORBIDDEN_MMAP_FLAGS) {
        pr_err("%s: %d %lx-%lx %s failed %d\n", __func__,
               proc->pid, vma->vm_start, vma->vm_end, "bad vm_flags", -EPERM);
        return -EPERM;
    }
    // Configure the VMA: not copied on fork, mixed map, never writable
    // from user space; then store binder_proc in vm_private_data
    vma->vm_flags |= VM_DONTCOPY | VM_MIXEDMAP;
    vma->vm_flags &= ~VM_MAYWRITE;

    vma->vm_ops = &binder_vm_ops;
    vma->vm_private_data = proc;

    // Set up the binder_proc's allocator for this mapping
    return binder_alloc_mmap_handler(&proc->alloc, vma);
}
```

Location: kernel/common/drivers/android/binder_alloc.h

```c
/**
 * struct binder_buffer - buffer used for binder transactions
 * @entry:              entry alloc->buffers
 * @rb_node:            node for allocated_buffers/free_buffers rb trees
 * @free:               %true if buffer is free
 * @clear_on_free:      %true if buffer must be zeroed after use
 * @allow_user_free:    %true if user is allowed to free buffer
 * @async_transaction:  %true if buffer is in use for an async txn
 * @oneway_spam_suspect: %true if total async allocate size just exceed
 *                      spamming detect threshold
 * @debug_id:           unique ID for debugging
 * @transaction:        pointer to associated struct binder_transaction
 * @target_node:        struct binder_node associated with this buffer
 * @data_size:          size of @transaction data
 * @offsets_size:       size of array of offsets
 * @extra_buffers_size: size of space for other objects (like sg lists)
 * @user_data:          user pointer to base of buffer space
 * @pid:                pid to attribute the buffer to (caller)
 *
 * Bookkeeping structure for binder transaction buffers
 */
// Buffer that holds the data of one binder transaction
struct binder_buffer {
    struct list_head entry; /* free and allocated entries by address */
    struct rb_node rb_node; /* free entry by size or allocated entry */
                            /* by address */
    unsigned free:1;                // 1 bit: the buffer is free
    unsigned clear_on_free:1;
    unsigned allow_user_free:1;     // user space may free this buffer
    unsigned async_transaction:1;
    unsigned oneway_spam_suspect:1;
    unsigned debug_id:27;           // 27-bit debug id
    struct binder_transaction *transaction; // transaction using this buffer
    struct binder_node *target_node;        // binder node this buffer is addressed to
    size_t data_size;               // size of the transaction data
    size_t offsets_size;            // size of the offsets array
    size_t extra_buffers_size;
    void __user *user_data;
    int pid;
};

/**
 * struct binder_alloc - per-binder proc state for binder allocator
 * @vma:                vm_area_struct passed to mmap_handler
 *                      (invariant after mmap)
 * @tsk:                tid for task that called init for this proc
 *                      (invariant after init)
 * @vma_vm_mm:          copy of vma->vm_mm (invariant after mmap)
 * @buffer:             base of per-proc address space mapped via mmap
 * @buffers:            list of all buffers for this proc
 * @free_buffers:       rb tree of buffers available for allocation
 *                      sorted by size
 * @allocated_buffers:  rb tree of allocated buffers sorted by address
 * @free_async_space:   VA space available for async buffers. This is
 *                      initialized at mmap time to 1/2 the full VA space
 * @pages:              array of binder_lru_page
 * @buffer_size:        size of address space specified via mmap
 * @pid:                pid for associated binder_proc (invariant after init)
 * @pages_high:         high watermark of offset in @pages
 * @oneway_spam_detected: %true if oneway spam detection fired, clear that
 * flag once the async buffer has returned to a healthy state
 *
 * Bookkeeping structure for per-proc address space management for binder
 * buffers. It is normally initialized during binder_init() and binder_mmap()
 * calls. The address space is used for both user-visible buffers and for
 * struct binder_buffer objects used to track the user buffers
 */
// Per-process allocator state for the binder mapping
struct binder_alloc {
    struct mutex mutex;             // lock
    struct vm_area_struct *vma;     // the mapped VMA
    struct mm_struct *vma_vm_mm;
    void __user *buffer;            // base of the per-process address space mapped via mmap
    struct list_head buffers;
    struct rb_root free_buffers;
    struct rb_root allocated_buffers;
    size_t free_async_space;
    struct binder_lru_page *pages;
    size_t buffer_size;
    uint32_t buffer_free;
    int pid;
    size_t pages_high;
    bool oneway_spam_detected;
};
```

Location: kernel/common/drivers/android/binder_alloc.c

```c
/**
 * binder_alloc_mmap_handler() - map virtual address space for proc
 * @alloc:      alloc structure for this proc
 * @vma:        vma passed to mmap()
 *
 * Called by binder_mmap() to initialize the space specified in
 * vma for allocating binder buffers
 *
 * Return:
 *      0 = success
 *      -EBUSY = address space already mapped
 *      -ENOMEM = failed to map memory to given address space
 */
int binder_alloc_mmap_handler(struct binder_alloc *alloc,
                  struct vm_area_struct *vma)
{
    int ret;
    const char *failure_string;
    struct binder_buffer *buffer;

    mutex_lock(&binder_alloc_mmap_lock);
    if (alloc->buffer_size) {
        ret = -EBUSY;
        failure_string = "already mapped";
        goto err_already_mapped;
    }
    // Use the VMA length, capped at 4 MB (nothing is allocated yet)
    alloc->buffer_size = min_t(unsigned long, vma->vm_end - vma->vm_start,
                   SZ_4M);
    mutex_unlock(&binder_alloc_mmap_lock);

    // Record the user-space start address of the mapping
    alloc->buffer = (void __user *)vma->vm_start;

    // Allocate the page-descriptor array, one entry per page; the physical
    // pages themselves are allocated later, when buffers are handed out
    alloc->pages = kcalloc(alloc->buffer_size / PAGE_SIZE,
                   sizeof(alloc->pages[0]),
                   GFP_KERNEL);
    if (alloc->pages == NULL) {
        ret = -ENOMEM;
        failure_string = "alloc page array";
        goto err_alloc_pages_failed;
    }

    // Allocate the first binder_buffer, covering the whole space
    buffer = kzalloc(sizeof(*buffer), GFP_KERNEL);
    if (!buffer) {
        ret = -ENOMEM;
        failure_string = "alloc buffer struct";
        goto err_alloc_buf_struct_failed;
    }

    buffer->user_data = alloc->buffer;
    // Add it to the address-ordered list of all buffers
    list_add(&buffer->entry, &alloc->buffers);
    // 1 means the buffer is free
    buffer->free = 1;
    // Insert the buffer into the alloc->free_buffers red-black tree
    binder_insert_free_buffer(alloc, buffer);
    // Async (oneway) transactions may use at most half of the space
    alloc->free_async_space = alloc->buffer_size / 2;
    // Bind binder_alloc to the vm_area_struct
    binder_alloc_set_vma(alloc, vma);
    mmgrab(alloc->vma_vm_mm);

    return 0;

err_alloc_buf_struct_failed:
    kfree(alloc->pages);
    alloc->pages = NULL;
err_alloc_pages_failed:
    alloc->buffer = NULL;
    mutex_lock(&binder_alloc_mmap_lock);
    alloc->buffer_size = 0;
err_already_mapped:
    mutex_unlock(&binder_alloc_mmap_lock);
    binder_alloc_debug(BINDER_DEBUG_USER_ERROR,
               "%s: %d %lx-%lx %s failed %d\n", __func__,
               alloc->pid, vma->vm_start, vma->vm_end,
               failure_string, ret);
    return ret;
}
```

4. binder_ioctl

Location: kernel/common/drivers/android/binder.c

```c
// binder_ioctl(): entry point for every binder ioctl command, including reads and writes
static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
    int ret;
    struct binder_proc *proc = filp->private_data;
    struct binder_thread *thread;
    unsigned int size = _IOC_SIZE(cmd);
    void __user *ubuf = (void __user *)arg;

    /*pr_info("binder_ioctl: %d:%d %x %lx\n",
            proc->pid, current->pid, cmd, arg);*/

    binder_selftest_alloc(&proc->alloc);

    trace_binder_ioctl(cmd, arg);

    // Sleeps (interruptibly) only if the binder_stop_on_user_error debug
    // switch has been raised to 2 or more; normally returns at once
    ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);
    if (ret)
        goto err_unlocked;

    /*
     * Look up the binder_thread of the calling thread (current->pid) in
     * binder_proc. If it is already in the proc's thread tree it is
     * returned directly; otherwise a new binder_thread is created and
     * added to binder_proc.
     */
    thread = binder_get_thread(proc);
    if (thread == NULL) {
        ret = -ENOMEM;
        goto err;
    }

    switch (cmd) {
    // Read/write command
    case BINDER_WRITE_READ:
        ret = binder_ioctl_write_read(filp, cmd, arg, thread);
        if (ret)
            goto err;
        break;
    // Set the maximum number of binder threads
    case BINDER_SET_MAX_THREADS: {
        int max_threads;

        if (copy_from_user(&max_threads, ubuf,
                   sizeof(max_threads))) {
            ret = -EINVAL;
            goto err;
        }
        binder_inner_proc_lock(proc);
        proc->max_threads = max_threads;
        binder_inner_proc_unlock(proc);
        break;
    }
    // Register the caller (servicemanager) as the context manager
    case BINDER_SET_CONTEXT_MGR_EXT: {
        struct flat_binder_object fbo;

        if (copy_from_user(&fbo, ubuf, sizeof(fbo))) {
            ret = -EINVAL;
            goto err;
        }
        ret = binder_ioctl_set_ctx_mgr(filp, &fbo);
        if (ret)
            goto err;
        break;
    }
    case BINDER_SET_CONTEXT_MGR:
        ret = binder_ioctl_set_ctx_mgr(filp, NULL);
        if (ret)
            goto err;
        break;
    ...
}
```

Location: kernel/common/include/uapi/linux/android/binder.h (the read/write struct is part of the user-space ABI)

```c
// Read/write descriptor passed with BINDER_WRITE_READ
struct binder_write_read {
    binder_size_t write_size;       // bytes to write
    binder_size_t write_consumed;   // bytes consumed by the driver
    binder_uintptr_t write_buffer;  // user-space address of the write data
    binder_size_t read_size;        // bytes to read
    binder_size_t read_consumed;    // bytes consumed by the driver
    binder_uintptr_t read_buffer;   // user-space address of the read buffer
};
```

Location: kernel/common/drivers/android/binder.c

```c
static int binder_ioctl_write_read(struct file *filp,
                unsigned int cmd, unsigned long arg,
                struct binder_thread *thread)
{
    int ret = 0;
    struct binder_proc *proc = filp->private_data;
    unsigned int size = _IOC_SIZE(cmd);
    void __user *ubuf = (void __user *)arg;
    struct binder_write_read bwr;

    if (size != sizeof(struct binder_write_read)) {
        ret = -EINVAL;
        goto out;
    }
    // Copy struct binder_write_read from user space (ubuf) into bwr
    if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
        ret = -EFAULT;
        goto out;
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
             "%d:%d write %lld at %016llx, read %lld at %016llx\n",
             proc->pid, thread->pid,
             (u64)bwr.write_size, (u64)bwr.write_buffer,
             (u64)bwr.read_size, (u64)bwr.read_buffer);

    // If there is data to write, process the write half first
    if (bwr.write_size > 0) {
        ret = binder_thread_write(proc, thread,
                      bwr.write_buffer,
                      bwr.write_size,
                      &bwr.write_consumed);
        trace_binder_write_done(ret);
        if (ret < 0) {
            bwr.read_consumed = 0;
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto out;
        }
    }
    // If the caller supplied a read buffer, read incoming work into it
    // (this may block unless the file was opened with O_NONBLOCK)
    if (bwr.read_size > 0) {
        ret = binder_thread_read(proc, thread, bwr.read_buffer,
                     bwr.read_size,
                     &bwr.read_consumed,
                     filp->f_flags & O_NONBLOCK);
        trace_binder_read_done(ret);
        binder_inner_proc_lock(proc);
        // If proc->todo still holds work, wake up a thread of this process to handle it
        if (!binder_worklist_empty_ilocked(&proc->todo))
            binder_wakeup_proc_ilocked(proc);
        binder_inner_proc_unlock(proc);
        if (ret < 0) {
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto out;
        }
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
             "%d:%d wrote %lld of %lld, read return %lld of %lld\n",
             proc->pid, thread->pid,
             (u64)bwr.write_consumed, (u64)bwr.write_size,
             (u64)bwr.read_consumed, (u64)bwr.read_size);
    // Copy bwr (with the updated consumed counters) back to user space (ubuf)
    if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {
        ret = -EFAULT;
        goto out;
    }
out:
    return ret;
}
```